Building a RAG-Based Chatbot with LLMs and PDF Data: The Future of AI-Powered Assistance

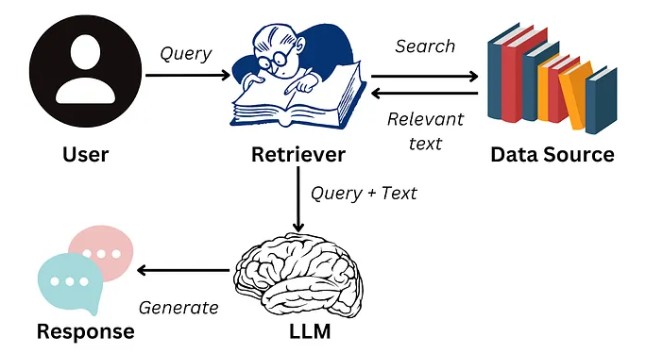

Retrieval-Augmented Generation (RAG) is transforming chatbot development by combining large language models (LLMs) with external knowledge retrieval. Unlike traditional chatbots that rely solely on what the model learned during pre-training, a RAG-based system fetches relevant information from sources like PDFs, databases, and APIs before generating a response. This helps users receive accurate, contextually relevant answers instead of answers drawn from outdated or static knowledge. Using embedding models and vector databases, a RAG system can index PDF documents and retrieve the passages that matter for a given question, making it a powerful tool for fields like law, healthcare, and academia, where accessing and summarizing large amounts of text is crucial.

The best way to predict the future is to create it.

by Peter Drucker

The process of building a RAG-based chatbot involves several components: document parsing, embedding generation, and retrieval. An embedding model converts text extracted from PDFs into vector representations, and a library such as FAISS (Facebook AI Similarity Search) indexes those vectors for similarity search; frameworks like LangChain tie the steps together. At query time, the retriever finds the most relevant passages and the LLM synthesizes an answer from them. OpenAI's GPT models, LLaMA, and other transformer-based architectures serve as the backbone for natural language understanding, enabling contextual responses based on the retrieved data. Grounding responses in real, verifiable documents improves accuracy and reduces hallucinations. Fine-tuning the model on domain-specific data can further improve its ability to provide expert-level assistance.
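To make the parse, embed, and retrieve steps concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a production pipeline: the text is assumed to be already extracted from the PDF, the `embed` function is a toy bag-of-words stand-in for a real embedding model, and a linear scan stands in for a vector index such as FAISS. All function names (`chunk_text`, `embed`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, size=40, overlap=10):
    """Split text into overlapping windows of `size` words (size > overlap)."""
    words = text.split()
    step = size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]


def embed(text):
    """Toy embedding: a sparse word-count vector.

    Assumption: a real system would call an embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query (linear scan)."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, context_chunks):
    """Assemble a grounded prompt to send to the LLM of your choice."""
    context = "\n---\n".join(context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical passages standing in for text extracted from a PDF.
passages = [
    "The warranty covers battery defects for two years.",
    "Shipping takes five business days.",
    "Returns are accepted within thirty days.",
]
question = "How long is the battery warranty?"
prompt = build_prompt(question, retrieve(question, passages, k=1))
```

The resulting `prompt` string is what would be passed to the language model. Swapping `embed` for a real embedding model and the linear scan for a vector index changes the quality and speed of retrieval, but not the overall shape of the pipeline.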

Despite its advantages, RAG-based chatbot development presents challenges such as managing large-scale document indexing, handling ambiguous queries, and optimizing retrieval efficiency. Solutions like hybrid search (combining keyword and vector search) and prompt engineering are being explored to refine response quality. As AI continues to advance, integrating multimodal capabilities—such as extracting insights from scanned PDFs and images—will further enhance chatbot functionality. The future of RAG-powered chatbots lies in their ability to deliver knowledge-driven, context-aware interactions, bridging the gap between human expertise and AI automation.
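One common way to combine keyword and vector results in a hybrid search is reciprocal rank fusion (RRF), which merges ranked lists using only each document's position in them. The sketch below is an assumption about how such a combination could look, not a method described in this article; the function name and document IDs are hypothetical, and `k=60` is a conventional default for the RRF constant.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1. Higher fused score means a better result.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


# Hypothetical outputs of a keyword search and a vector search.
keyword_ranking = ["doc_b", "doc_a", "doc_c"]
vector_ranking = ["doc_a", "doc_c", "doc_b"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
```

Because RRF uses ranks rather than raw scores, it avoids having to normalize keyword scores and vector similarities onto a common scale, which is one reason it is a popular starting point.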